December 29, 2025

18 min read

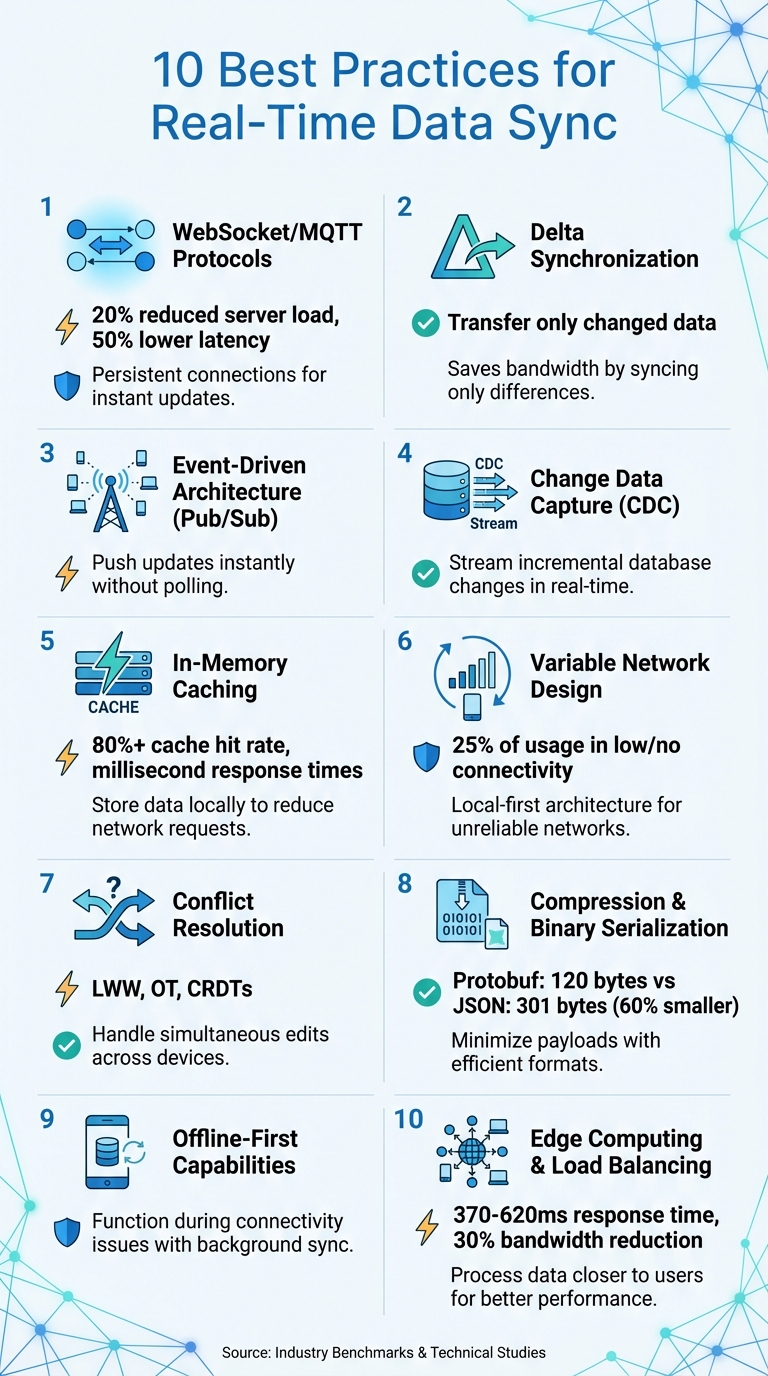

Real-time data sync ensures instant updates across devices, improving user experience and system performance. This article covers ten key practices for building reliable, efficient sync systems for mobile apps.

These strategies help apps remain responsive, reduce server load, and handle network challenges effectively.

10 Best Practices for Real-Time Data Synchronization in Mobile Apps

With traditional HTTP polling, the client has to send a new request (and often open a new connection) for every update, which introduces delays. WebSockets and MQTT avoid this by maintaining a single, persistent connection, so updates can be pushed to devices immediately without repeated polling. WebSockets support full-duplex communication, meaning data can flow in both directions at the same time. MQTT, on the other hand, uses a lightweight publish-subscribe model, which suits sending messages to a large number of devices. The design of these protocols also plays a key role in how efficiently data is handled.

One of MQTT's standout features is its fixed header, which can be as small as 2 bytes. Compare that to HTTP headers, which often exceed 800 bytes. This makes MQTT especially valuable on mobile networks, where minimizing data usage is critical. Switching from HTTP polling to WebSockets can reduce server load by up to 20% and cut latency by approximately 50%. Additionally, MQTT offers three levels of Quality of Service (QoS): 0 (at most once), 1 (at least once), and 2 (exactly once). QoS 0 is fire-and-forget, while QoS 1 and 2 add acknowledgments that keep delivery reliable even over unstable connections. This reliability is particularly important when adapting to the challenges of mobile networks.
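To make the 2-byte figure concrete, here is a minimal sketch of how an MQTT fixed header is laid out: one byte for packet type and flags, followed by a variable-length "remaining length" field. The function names are illustrative and not part of any MQTT library; in a real app a client library would build these bytes for you.

```python
def encode_remaining_length(length: int) -> bytes:
    """Encode MQTT's variable-length 'remaining length' field (1 to 4 bytes)."""
    if not 0 <= length <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        digit = length % 128
        length //= 128
        if length > 0:
            digit |= 0x80  # continuation bit: more length bytes follow
        out.append(digit)
        if length == 0:
            return bytes(out)


def fixed_header(packet_type: int, flags: int, remaining_length: int) -> bytes:
    """Build the fixed header: one type/flags byte plus the encoded length."""
    first_byte = (packet_type << 4) | (flags & 0x0F)
    return bytes([first_byte]) + encode_remaining_length(remaining_length)


PINGREQ = 12   # keep-alive packet with no payload
PUBLISH = 3

ping = fixed_header(PINGREQ, 0, 0)                 # smallest possible packet
publish_qos1 = fixed_header(PUBLISH, 0b0010, 321)  # QoS 1, 321 bytes follow
```

A keep-alive ping is the whole 2-byte header and nothing else, and even a PUBLISH carrying a few hundred bytes needs only 3 bytes of fixed header.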

Mobile devices often switch between networks, such as Wi-Fi and cellular data. MQTT handles these transitions smoothly by using persistent sessions, which significantly cut down reconnection times after temporary network disruptions. Another advantage is that MQTT can improve battery life by 25%–30% during frequent updates.

When choosing between these protocols, consider the use case. WebSockets are ideal for scenarios requiring continuous, high-frequency data streams, like real-time chat or collaborative editing, where fast, bidirectional communication is key. On the other hand, MQTT shines in lightweight messaging over unreliable or low-bandwidth mobile networks, especially when battery efficiency is a priority. Regardless of the protocol, always ensure secure connections (e.g., using wss:// for WebSockets) to safeguard data.

Delta synchronization focuses on transferring only the data that's changed since the last sync, instead of sending the entire dataset each time. Imagine updating a single photo in your library without having to re-download the whole collection - that’s the essence of this method. It’s a smart way to cut down on bandwidth usage, which is a big deal for users on limited or metered mobile data plans.

"App Services does not re-upload the entire object. Instead, App Services sends only the difference ('delta') between before and after." - MongoDB Docs

The process typically involves using a timestamp or a sync token to pinpoint changed records. These changes are sent as "changesets", which are granular updates. MongoDB Atlas Device Sync takes it a step further by utilizing zlib compression for these deltas, shrinking payload sizes to suit mobile networks. This combination ensures syncing is both reliable and scalable.

With delta sync, the server shoulders the responsibility of identifying changes. It creates and maintains a "Delta table", essentially a log of missed events for clients that were offline. When a user reconnects, the system provides only the logged updates instead of querying the entire database. To prevent the log from growing too large, you can implement Time-to-Live (TTL) settings, which automatically clean up older entries after a set period.

For scenarios requiring high reliability, soft deletes can be employed. Instead of permanently removing records, an is_deleted flag is used, allowing offline clients to recognize deletions during their next sync. Advanced approaches like Operational Transformation or CRDTs are often used to handle complex merges.

Delta sync shines in low-bandwidth environments. By transmitting only small payloads, it ensures updates are quick and require less processing power, even on unstable connections. Pairing delta sync with resumable sessions - enhanced by exponential backoff and retry mechanisms - helps maintain app reliability during network interruptions or carrier transitions. This adaptability makes it an excellent choice for mobile-first applications.
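The token-based flow described above can be sketched in a few lines. This is a simplified in-memory model, not the API of MongoDB Atlas Device Sync or any other product: `DeltaServer`, `changes_since`, and `apply_delta` are hypothetical names, and the sync token is assumed to be a plain integer change counter.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    value: str
    version: int              # server-assigned change counter
    is_deleted: bool = False  # soft-delete flag (tombstone)


@dataclass
class DeltaServer:
    records: dict = field(default_factory=dict)
    version: int = 0

    def upsert(self, key: str, value: str) -> None:
        self.version += 1
        self.records[key] = Record(value, self.version)

    def delete(self, key: str) -> None:
        # Soft delete: keep a tombstone so offline clients learn about it.
        self.version += 1
        self.records[key] = Record("", self.version, is_deleted=True)

    def changes_since(self, token: int):
        """Return only records changed after `token`, plus the new token."""
        delta = {k: r for k, r in self.records.items() if r.version > token}
        return delta, self.version


def apply_delta(local: dict, delta: dict) -> None:
    """Apply a changeset to the client's local copy."""
    for key, record in delta.items():
        if record.is_deleted:
            local.pop(key, None)
        else:
            local[key] = record.value


server = DeltaServer()
server.upsert("a", "1")
server.upsert("b", "2")

local = {}
delta, token = server.changes_since(0)      # first sync: everything
apply_delta(local, delta)

server.upsert("a", "1-edited")
server.delete("b")
delta, token = server.changes_since(token)  # later sync: only two changes
apply_delta(local, delta)
```

A production system would also expire old tombstones (the TTL idea mentioned earlier) and fall back to a full sync when a client's token is older than the retained history.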

An event-driven Pub/Sub architecture pushes updates instantly, eliminating the need for constant polling and cutting down on delays. This push-based model not only reduces latency but also supports systems that need to scale efficiently.

Using asynchronous communication, producers can send messages without waiting for consumers to process them. This setup removes bottlenecks and allows interfaces to update almost instantly, even while backend validations are still running. By decoupling components through shared topics, producers can operate at full capacity without being tied to consumer performance.

Pub/Sub systems excel at scaling horizontally. Each subscriber manages its own state, so adding more consumers doesn’t disrupt the existing workload.

"In the pubsub model, the messaging system doesn't need to know about any of the subscribers... Instead, the subscribers track which messages have been received and are responsible for self-managing load levels and scaling." - Google Cloud Documentation

Take systems like Cloud Firestore, for example. They use fan-out mechanisms to distribute a single data change to millions of users at once. To ensure reliability, Pub/Sub offers delivery guarantees, such as at-least-once or exactly-once delivery. It also uses dead-letter queues to handle failed messages, preventing errors from halting the entire synchronization process.

For mobile apps running on unreliable networks, combining Pub/Sub with MQTT can be a game-changer. MQTT uses less bandwidth than HTTP, which makes it a good fit for low-throughput conditions, including cases where the signal looks strong but actual throughput is poor.

Keeping a stream history is equally important. By logging events, offline devices can replay missed updates once they reconnect. Subscriber flow control, like capping the number of outstanding messages or limiting message size, further ensures smooth performance when devices come back online.
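As a rough illustration of stream history and subscriber-side flow control, the sketch below keeps an append-only log per topic and lets each subscriber track its own offset. `Broker` and `Subscriber` are hypothetical in-memory classes, not the Google Cloud Pub/Sub client, and `max_outstanding` stands in for whatever flow-control setting a real client exposes.

```python
from collections import defaultdict


class Broker:
    """In-memory topic log; each topic keeps an append-only history."""

    def __init__(self) -> None:
        self.history = defaultdict(list)

    def publish(self, topic: str, message: str) -> int:
        self.history[topic].append(message)
        return len(self.history[topic]) - 1  # offset of the new message

    def pull(self, topic: str, offset: int, max_messages: int) -> list:
        """Return up to `max_messages` starting at `offset`."""
        return self.history[topic][offset:offset + max_messages]


class Subscriber:
    """Tracks its own offset, so the broker needs no per-subscriber state."""

    def __init__(self, broker: Broker, topic: str, max_outstanding: int = 2) -> None:
        self.broker = broker
        self.topic = topic
        self.max_outstanding = max_outstanding  # flow-control cap per batch
        self.offset = 0
        self.received = []

    def catch_up(self) -> int:
        """Replay missed messages in bounded batches; return the batch count."""
        batches = 0
        while True:
            batch = self.broker.pull(self.topic, self.offset, self.max_outstanding)
            if not batch:
                return batches
            self.received.extend(batch)
            self.offset += len(batch)
            batches += 1


broker = Broker()
phone = Subscriber(broker, "orders")
for i in range(5):                 # published while the phone was offline
    broker.publish("orders", f"order-{i}")
batches = phone.catch_up()         # replays five messages in capped batches
```

Capping the batch size means a device that reconnects after a long gap works through its backlog in small steps instead of receiving everything at once.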

Dots Mobile (https://dots-mobile.com) applies these methods to deliver seamless real-time synchronization, regardless of network conditions.

Building on the efficiency of delta synchronization, Change Data Capture (CDC) takes things a step further by capturing and streaming incremental database changes. This approach gives you more precise control over data transfer.

CDC focuses on tracking database changes - like inserts, updates, and deletes - and sends only these incremental updates to your mobile app. By avoiding the need to transfer entire datasets, CDC drastically reduces bandwidth usage. This can be a game changer for mobile devices, especially for users dealing with limited data plans or unreliable connections.

"CDC helps systems propagate only what changed - fast and efficiently." - Rajas Abhyankar, Author

The most efficient way to implement CDC is through a log-based approach. By reading the database's write-ahead logs (WAL or binlog), this method skips the extra overhead of querying tables or setting up triggers. The result? Near-real-time updates that ensure mobile users have up-to-date information, without waiting for traditional batch ETL processes that might run hourly or even daily.

Once the changes are captured efficiently, the system must also handle scaling and reliability to maintain smooth operation.

CDC systems often rely on a message broker, like Kafka or Redpanda, to act as a middle layer between the database and mobile clients. This setup is key for managing spikes in data volume and ensuring smooth data delivery, even when network conditions are unpredictable. The message broker also tracks offsets, allowing data delivery to resume exactly where it left off if a connection drops.

To keep things running smoothly, ensure that your mobile app's database can handle idempotent updates. This prevents duplicate data from being processed. Additionally, monitor replication lag - metrics like kafka_consumergroup_lag can help you ensure that your synchronization pipeline keeps up with incoming changes.
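One simple way to make updates idempotent is to remember the highest log offset already applied and skip anything at or below it. The sketch below assumes events arrive in offset order from a single partition; `ChangeEvent` and `LocalReplica` are illustrative names rather than part of Kafka, Redpanda, or any CDC tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    offset: int                  # position in the broker's log
    op: str                      # "insert", "update", or "delete"
    key: str
    value: Optional[str] = None


class LocalReplica:
    def __init__(self) -> None:
        self.rows = {}
        self.last_offset = -1    # highest offset already applied

    def apply(self, event: ChangeEvent) -> bool:
        """Apply an event once; redelivered events are skipped."""
        if event.offset <= self.last_offset:
            return False         # duplicate from an at-least-once redelivery
        if event.op == "delete":
            self.rows.pop(event.key, None)
        else:
            self.rows[event.key] = event.value or ""
        self.last_offset = event.offset
        return True


events = [
    ChangeEvent(0, "insert", "user:1", "Ada"),
    ChangeEvent(1, "update", "user:1", "Ada L."),
    ChangeEvent(1, "update", "user:1", "Ada L."),  # redelivered after a reconnect
    ChangeEvent(2, "delete", "user:1"),
    ChangeEvent(3, "insert", "user:2", "Grace"),
]
replica = LocalReplica()
applied = [replica.apply(e) for e in events]
```

In a real app, the row change and the stored offset should be written in the same local database transaction so a crash cannot separate them.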

CDC's efficiency shines on unreliable mobile networks because it transmits only the incremental changes instead of full datasets. When paired with compression, it further reduces the size of data payloads. If connectivity drops during periods of high database activity, the CDC system queues the changes and processes them as the network recovers. For instance, if one hour of database activity takes 15 minutes to process, a four-hour outage would require about 80 minutes to fully catch up. Monitoring this lag helps you understand any delays mobile clients may experience during connectivity issues.
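The 80-minute figure follows from the fact that new changes keep arriving while the backlog is being drained. Here is a small sketch of that arithmetic, assuming a constant change rate and a constant processing speed; the function name is illustrative.

```python
def catch_up_minutes(outage_hours: float, minutes_per_hour_of_changes: float) -> float:
    """Estimate how long a pipeline needs to clear a backlog after an outage.

    `minutes_per_hour_of_changes` is how long it takes to process one hour
    of database activity (15 in the example above).
    """
    if minutes_per_hour_of_changes >= 60:
        raise ValueError("pipeline is not faster than real time; it never catches up")
    # Time to process the backlog alone: 4 h x 15 min = 60 min.
    backlog_minutes = outage_hours * minutes_per_hour_of_changes
    # Share of capacity consumed by changes that arrive during catch-up: 15/60.
    arrival_share = minutes_per_hour_of_changes / 60
    return backlog_minutes / (1 - arrival_share)


estimate = catch_up_minutes(outage_hours=4, minutes_per_hour_of_changes=15)
```

With these inputs the backlog alone is 60 minutes of work, but only 75% of capacity goes toward it, so the total comes to 60 / 0.75 = 80 minutes.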

In-memory caching plays a key role in ensuring updates reach users with minimal lag. By storing frequently accessed data locally - either in memory or on-device storage - your app can skip repetitive network requests. Paired with protocol tweaks and delta synchronization, this approach significantly cuts down response times.

Fetching data directly from on-device storage is lightning-fast, returning results in milliseconds compared to the much slower API calls, which can take hundreds of milliseconds. This difference becomes even more noticeable in unreliable network conditions, often called "Lie-Fi."

"A query against an on-device database can return results in milliseconds; an API call may take hundreds. The result? A snappy, responsive UI powered by a local store feels instantly modern." – Sudhir Mangla, Mobile Architect

By adopting a local-first approach, your app can deliver immediate UI responses by pulling data straight from the cache. Meanwhile, a background sync engine handles slower network updates. This setup allows for optimistic UI updates, where user changes appear instantly - a feature that’s critical since 75% of users expect apps to react immediately.

Caching isn’t just about speed - it also bolsters system stability. Client-side caching ensures low-latency access and offline functionality, while remote caching helps maintain consistency across devices. A well-implemented caching strategy can achieve a cache hit rate of over 80%.

The choice of caching pattern depends on your data needs.

To avoid stale data, always set a Time-to-Live (TTL) for cache entries and use an eviction policy like Least Recently Used (LRU) to free up memory when necessary.
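A TTL combined with LRU eviction can be sketched with the standard library alone. `TTLLRUCache` is a hypothetical class for illustration; the injectable `clock` parameter is an assumption added so the behaviour can be demonstrated without real waiting.

```python
import time
from collections import OrderedDict
from typing import Callable, Optional


class TTLLRUCache:
    def __init__(self, max_entries: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_entries = max_entries
        self.ttl = ttl_seconds
        self.clock = clock
        self._data = OrderedDict()   # key -> (expires_at, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]          # stale: caller should refetch
            return None
        self._data.move_to_end(key)      # mark as most recently used
        return value

    def put(self, key: str, value: str) -> None:
        self._data[key] = (self.clock() + self.ttl, value)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)   # evict least recently used


now = [0.0]                               # fake clock for a deterministic demo
cache = TTLLRUCache(max_entries=2, ttl_seconds=30, clock=lambda: now[0])
cache.put("profile", "v1")
cache.put("feed", "v1")
fresh = cache.get("profile")              # touch: "feed" becomes the LRU entry
cache.put("settings", "v1")               # over capacity: evicts "feed"
evicted = cache.get("feed")
now[0] = 31.0
expired = cache.get("profile")            # past its TTL
```

On a cache miss, whether from eviction or expiry, the app falls back to the network or the on-device database and repopulates the entry.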

In-memory caching shines when network conditions are unpredictable. By checking the cache first, your app can use delta synchronization to fetch only the updated segments, avoiding the need to re-download entire datasets. This keeps the app responsive, even during connectivity hiccups.

To further optimize, adjust prefetching based on the network type - this minimizes energy consumption and shortens transfer times. A network-aware caching strategy is invaluable when dealing with intermittent connectivity.

As mobile networks are inherently unpredictable, designing for these conditions is essential. Users frequently move between 5G, LTE, and unreliable Wi‑Fi connections, with about 25% of usage occurring in low or no connectivity environments. And then there’s the tricky problem of "Lie‑Fi" - those moments when signal bars appear, but performance suffers due to poor throughput, latency spikes, or dropped requests.

A local-first architecture is a strong defense against network inconsistency. By treating the on-device database (like Room, SQLite, or Realm) as the single source of truth, your app ensures that the user interface (UI) operates independently of the network. This means the UI interacts only with local data, while a background sync engine handles server updates. The result? A responsive app experience even during connectivity hiccups.

To make this setup even smarter, implement network-aware logic to delay heavy sync operations during poor network conditions. For example, monitor connection types, keep an eye on battery levels, and use tools like Android's WorkManager or iOS BackgroundTasks to schedule non-urgent tasks. These tools can prioritize syncing when the device is charging and connected to Wi‑Fi, ensuring efficient use of resources.

When it comes to data transfer, choosing the right protocols and strategies can make all the difference. For low-bandwidth scenarios, MQTT is a great option, while HTTP/3 (QUIC) helps reduce handshake overhead and handles packet loss more effectively. Battery efficiency is also key - managing radio usage wisely can save power. By bundling data transfers, you can reduce radio "tail time", which conserves battery life. Additionally, using binary serialization formats like Protocol Buffers or MessagePack minimizes payload sizes, further conserving resources.

"The more disruptive reality is not 'No‑Fi' (complete absence of connectivity) but 'Lie‑Fi' - those frustrating, inconsistent conditions where the phone technically shows signal, but throughput is abysmal, latency is spiky, or requests intermittently drop."

– Sudhir Mangla, Mobile Architect

To prepare for real-world network issues, simulate high latency and packet loss using tools like Charles Proxy or tc. Pair these tests with strategies like exponential backoff and connectivity checks to ensure your app remains efficient and battery-friendly, even under challenging conditions.
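Exponential backoff is usually paired with random jitter so that many devices coming back online do not retry at the same instant. The sketch below uses the common "full jitter" variant; the default `base` and `cap` values are illustrative assumptions, not recommendations from any particular SDK.

```python
import random
from typing import Optional


def backoff_ceiling(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Upper bound in seconds for the wait before retry `attempt` (0-based)."""
    return min(cap, base * 2 ** attempt)


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  rng: Optional[random.Random] = None) -> float:
    """Pick a random delay in [0, ceiling] so clients do not retry in lockstep."""
    if rng is None:
        rng = random.Random()
    return rng.uniform(0.0, backoff_ceiling(attempt, base, cap))


ceilings = [backoff_ceiling(n) for n in range(8)]
delays = [backoff_delay(n, rng=random.Random(42)) for n in range(8)]
```

The ceiling doubles on each attempt until it reaches the cap, which keeps the radio mostly idle during long outages while still recovering quickly from short ones.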

When users edit the same data at the same time or across multiple devices, conflicts are bound to happen. Without a solid plan, updates can be lost or become inconsistent, leading to frustrating user experiences.

To tackle this, you need a conflict resolution model that fits your application's needs. For instance, Last-Write-Wins (LWW) takes the update with the latest timestamp. While this works for less critical data, it can lead to issues during near-simultaneous updates or when clocks aren't perfectly in sync. For more complex use cases, Operational Transformation (OT) is a better option. It adjusts concurrent operations so every client ends up with the same final state, making it ideal for collaborative tools like text editors. Another approach is Conflict-Free Replicated Data Types (CRDTs), which automatically merge changes using mathematical rules. This is great for distributed systems, though it can increase data overhead.

Many systems also rely on deterministic rules to handle conflicts. For example, MongoDB Atlas Device Sync uses rules like "Deletes always win" or primary key-based resolution to manage conflicts at scale. When working with counters, avoid overwriting values. Instead, use increment and decrement operations to ensure that updates from multiple users add up correctly.
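Two of the ideas above, Last-Write-Wins with a deterministic tie-breaker and counters that merge by adding up per-device contributions, can be sketched as follows. These are textbook-style CRDT shapes written for illustration and are not how MongoDB Atlas Device Sync implements its rules; the class and field names are assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LWWRegister:
    value: str
    timestamp: float
    device_id: str   # tie-breaker when timestamps are equal

    def merge(self, other: "LWWRegister") -> "LWWRegister":
        return max(self, other, key=lambda r: (r.timestamp, r.device_id))


@dataclass
class PNCounter:
    """Per-device increment and decrement totals; merge takes the max of each."""
    incs: dict = field(default_factory=dict)
    decs: dict = field(default_factory=dict)

    def add(self, device_id: str, amount: int) -> None:
        bucket = self.incs if amount >= 0 else self.decs
        bucket[device_id] = bucket.get(device_id, 0) + abs(amount)

    def merge(self, other: "PNCounter") -> "PNCounter":
        def union(a: dict, b: dict) -> dict:
            return {k: max(a.get(k, 0), b.get(k, 0)) for k in a.keys() | b.keys()}
        return PNCounter(union(self.incs, other.incs), union(self.decs, other.decs))

    @property
    def value(self) -> int:
        return sum(self.incs.values()) - sum(self.decs.values())


phone = LWWRegister("Draft A", timestamp=100.0, device_id="phone")
tablet = LWWRegister("Draft B", timestamp=100.0, device_id="tablet")
winner = phone.merge(tablet)       # equal timestamps: device_id breaks the tie

likes_phone, likes_tablet = PNCounter(), PNCounter()
likes_phone.add("phone", 3)
likes_tablet.add("tablet", 2)
likes_tablet.add("tablet", -1)
merged = likes_phone.merge(likes_tablet)
```

Because both merges are commutative and idempotent, devices can exchange state in any order, or more than once, and still converge on the same result.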

"Device Sync handles conflict resolution using operational transformation, a set of rules that guarantee strong eventual consistency, meaning all clients' versions will eventually converge to identical states." – MongoDB

Be cautious of "ping-pong" effects, where updates endlessly trigger new updates in a loop. Chromium's sync documentation emphasizes this: "An incoming sync update must never directly lead to an outgoing update". If you need to re-upload merged data after resolving conflicts, limit how often this happens - perhaps only once per app startup - to avoid overloading your server. Additionally, pair your conflict resolution strategy with optimistic UI updates. These updates show changes immediately while validation happens in the background, keeping your app fast and responsive even during network hiccups.

Dots Mobile applies these strategies to ensure reliable, real-time data synchronization for its users.

When it comes to packing data efficiently, binary formats like Protobuf and FBE outperform text-based formats such as JSON. For instance, Protobuf can shrink a message down to just 120 bytes, whereas the same data in JSON would take up 301 bytes. Why? Binary formats such as Protobuf pack a field's ID and type into a compact tag (a single byte for low field numbers), while JSON repeats full field names like "email" in every message. This compact structure is especially important for mobile apps, where users often deal with unreliable network connections or limited data plans. A smaller payload means faster data transfer, and binary formats are also quicker to serialize.
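To show where the savings come from, the sketch below hand-encodes a tiny message using Protobuf's wire-format rules (a varint tag that combines field number and wire type) and compares it with the JSON equivalent. It is a teaching aid, not a replacement for the official Protobuf library, and the two-field `user` message with its field numbers is a made-up example.

```python
import json

VARINT, LEN = 0, 2   # Protobuf wire types used in this example


def varint(n: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)   # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def tag(field_number: int, wire_type: int) -> bytes:
    """Field number and wire type share one varint (one byte for fields 1-15)."""
    return varint((field_number << 3) | wire_type)


def encode_user(user_id: int, email: str) -> bytes:
    raw = email.encode("utf-8")
    return (tag(1, VARINT) + varint(user_id)      # field 1: id
            + tag(2, LEN) + varint(len(raw)) + raw)  # field 2: email


user = {"id": 150, "email": "ada@example.com"}
binary = encode_user(user["id"], user["email"])
text = json.dumps(user).encode("utf-8")
sizes = (len(binary), len(text))
```

The binary form spends one byte per field on the tag, while the JSON form repeats the quoted field names and punctuation in every message, so the gap widens as messages gain more fields.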

Binary serialization doesn't just save space - it also saves time. Take FBE, for example: it handles serialization in a mere 66 nanoseconds, compared to JSON's 740 nanoseconds. That’s an 11× speed improvement.

"String/textual encoding requires more bits to store, and the process of actually encoding/decoding your data to/from a string is expensive." – Jimmy Lee

For even greater speed, formats like Simple Binary Encoding (SBE) or Cap'n Proto use zero-copy techniques. These formats align the in-memory data layout with the byte sequence sent over the network, eliminating the need for extra encoding or decoding. This makes them perfect for scenarios that demand high-frequency data updates, such as financial dashboards or live collaboration tools.

The benefits go beyond speed - binary serialization also boosts scalability. By reducing CPU usage, servers can handle more simultaneous sync sessions. This efficiency translates to smoother performance for users and less strain on infrastructure.

Another key advantage is versioning support. As your app grows and users operate on different versions, formats like Protobuf and FBE ensure seamless communication with the server. This prevents sync disruptions when handling mixed-version clients. Additionally, implementing rate limits for re-uploading merged data - like restricting it to once per app startup - can avoid server overload caused by infinite update loops.

One of the trickiest issues for mobile apps isn't a total loss of connectivity - it’s something called "Lie-Fi." That’s when your device shows signal bars, but the connection is weak, slow, or unreliable. An offline-first approach tackles this head-on by making the local database the central hub for your app. The app's interface directly interacts with on-device storage, while a sync engine quietly handles network communication in the background. This setup works hand-in-hand with incremental sync methods, ensuring only small amounts of data are transferred during unstable connections.

Delta synchronization is a game changer - it sends only the changes made since the last update, reducing both bandwidth usage and server strain. To make this even better, tools like Android’s WorkManager or iOS BackgroundTasks can keep sync operations running, even if the app is closed or the device restarts. These tools can be set to work under ideal conditions, like when the device is connected to unmetered WiFi, which helps save battery life and data. Together, these strategies ensure smooth and efficient data handling, even when network conditions aren’t ideal.

Accessing data stored locally is lightning-fast - results come back in milliseconds, compared to the slower response times of API calls. By using optimistic updates, your app can instantly reflect user actions on the device, while the sync engine updates the server in the background when connectivity is restored. This approach avoids data loss during interruptions and maintains consistency between the app and the server. It’s a critical feature, especially since about 25% of mobile app usage happens in low or no-connectivity situations. Combining local data handling with smart sync operations ensures a smooth, real-time experience for users.
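The optimistic-update pattern can be sketched as a local store plus an outbox of pending writes. `OfflineFirstStore` and the `send` callback are hypothetical; in a real app the local store would be an on-device database and `send` a network call scheduled by something like WorkManager or BackgroundTasks.

```python
from collections import deque
from typing import Callable


class OfflineFirstStore:
    def __init__(self, send: Callable[[str, str], bool]) -> None:
        self.local = {}          # what the UI reads from
        self.outbox = deque()    # writes not yet confirmed by the server
        self.send = send         # returns False when the network is unavailable

    def write(self, key: str, value: str) -> None:
        self.local[key] = value              # optimistic: visible immediately
        self.outbox.append((key, value))     # queued for background sync

    def flush(self) -> int:
        """Send queued writes in order; stop at the first failure."""
        sent = 0
        while self.outbox:
            key, value = self.outbox[0]
            if not self.send(key, value):
                break                        # still offline: retry later
            self.outbox.popleft()
            sent += 1
        return sent


server = {}
online = [False]


def send(key: str, value: str) -> bool:
    if not online[0]:
        return False
    server[key] = value
    return True


store = OfflineFirstStore(send)
store.write("note:1", "buy milk")
store.write("note:1", "buy oat milk")
sent_offline = store.flush()    # nothing leaves the device yet
online[0] = True
sent_online = store.flush()     # queued writes are replayed in order
```

Keeping the write in the outbox until the server confirms it is what prevents data loss when a request fails partway through.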

At Dots Mobile (https://dots-mobile.com), we use these offline-first strategies to create scalable, reliable apps that work seamlessly - even when network conditions are less than perfect.

Edge computing shifts data processing closer to users, cutting down the distance data needs to travel. Instead of routing every request through a central data center, edge nodes are positioned just one or two network hops away from the user. This setup achieves response times between 370–620 milliseconds, ensuring updates happen almost instantly. This is especially critical for mobile apps, as nearly 60% of users uninstall apps due to slow performance. By 2025, Gartner estimates that 75% of enterprise-generated data will be created and processed outside of traditional data centers, making edge infrastructure a key player in modern systems.

Processing data at the edge doesn’t just improve speed - it also trims bandwidth costs. By handling and filtering data locally, only essential information is sent to the cloud, reducing data transfer by up to 30% when paired with optimized, minimal API responses for mobile devices. Using binary protocols with Gzip can further shrink edge-to-mobile payloads. WebSocket connections at the edge slash communication overhead by 70% compared to standard HTTP requests, making real-time synchronization far more efficient. Load balancing complements these advances by evenly distributing traffic, ensuring smoother operations.

Edge computing and load balancers work together to manage synchronization traffic across multiple nodes, preventing any single server from becoming overwhelmed. This horizontal scaling approach can handle millions of simultaneous users by spreading the workload evenly. When ramping up traffic for a new feature, follow the "500/50/5" rule described in Cloud Firestore's best practices: start with at most 500 operations per second, then increase traffic by 50% every 5 minutes to avoid bottlenecks. Additionally, deploying Multi-AZ (Availability Zone) configurations ensures automatic failover if an availability zone goes down.
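The "500/50/5" ramp is a simple geometric schedule, which makes it easy to estimate how long a rollout needs before it can carry full load. The helper names below are illustrative, and the defaults mirror the numbers in the rule.

```python
def max_ops_per_second(minutes_since_launch: float, start: float = 500.0,
                       growth: float = 0.5, step_minutes: float = 5.0) -> float:
    """Allowed operations per second at a given point in the ramp."""
    steps = int(minutes_since_launch // step_minutes)
    return start * (1 + growth) ** steps


def minutes_to_reach(target_ops: float, start: float = 500.0,
                     growth: float = 0.5, step_minutes: int = 5) -> int:
    """Minutes of ramping needed before `target_ops` per second is allowed."""
    steps = 0
    while start * (1 + growth) ** steps < target_ops:
        steps += 1
    return steps * step_minutes


schedule = [max_ops_per_second(m) for m in (0, 5, 10, 12)]
ramp_time = minutes_to_reach(10_000)
```

Under these assumptions, reaching 10,000 operations per second takes 8 steps, or 40 minutes, which is worth factoring into launch plans.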

Edge nodes remain operational even when cloud connectivity falters, which is crucial since about 25% of mobile usage happens in low or no-connectivity environments. This localized processing addresses "Lie-Fi" issues - when devices show signal bars but the connection is unreliable. Placing databases closer to users further reduces latency. The continued rollout of 5G networks will amplify these benefits, enabling near-instantaneous data synchronization when paired with edge infrastructure.

At Dots Mobile (https://dots-mobile.com), we design apps that leverage edge computing and load balancing to ensure fast, reliable real-time synchronization - regardless of where your users are or the network conditions they face. This approach builds on earlier strategies to provide a seamless and dependable real-time experience in any environment.

Creating reliable real-time sync in mobile apps requires a thoughtful, multi-layered approach that addresses connectivity, performance, and scalability. The ten best practices we've discussed combine to build systems that stay responsive no matter the network conditions. As mobile architect Sudhir Mangla aptly states, "The network becomes a companion, not a crutch". By treating the on-device database as the Single Source of Truth, apps can remain functional and responsive, even in challenging "Lie-Fi" or "No-Fi" scenarios.

Techniques like delta sync, binary serialization, WebSocket protocols, and conflict resolution ensure users stay engaged, even when network reliability dips. Meanwhile, event-driven architecture and edge computing support scalability, and strategies like the "500/50/5" rule and CQRS patterns optimize performance for both reading and writing operations.

These methods also help conserve device resources. For instance, using compression algorithms and WebSocket connections reduces data transfer overhead, which is critical given that 25% of mobile usage happens in low or no-connectivity environments. Features like exponential backoff for retries and reactive observers further ensure that apps handle network issues gracefully without draining battery life.

At Dots Mobile, we incorporate these best practices into every mobile app development project. From backend development to quality assurance, we ensure real-time features work seamlessly across iOS and Android platforms. Our team leverages tools like Swift, Kotlin, and Python to implement the synchronization strategies outlined here. Whether you're building AI-powered fitness apps or enterprise solutions demanding real-time data consistency, we deliver scalable, user-friendly apps designed to perform under any conditions.

These strategies not only address current challenges but also prepare your app for future advancements. With trends like 5G-powered sync, AI-driven conflict resolution, and edge computing on the horizon, real-time synchronization will continue to evolve. By adopting these practices today, you're not just meeting user expectations for immediate feedback - you're laying the groundwork for scalable, forward-thinking solutions that will keep your app ahead of the curve.

Delta synchronization streamlines data syncing by sending only the changes (or deltas) made since the last update, rather than transmitting the entire dataset. This approach minimizes the volume of data transferred, saving bandwidth and making the process faster.

By offloading much of the heavy lifting to the backend, delta sync keeps mobile apps running smoothly and efficiently - even when managing large datasets or handling frequent updates.

WebSocket and MQTT are two protocols designed for low-latency, two-way communication, making them excellent choices for real-time data synchronization. Both are optimized for lightweight messaging, which helps reduce bandwidth usage and ensures dependable performance, even on less stable networks.

Thanks to their ability to enable quick and efficient data exchange, these protocols are particularly well-suited for mobile apps that require real-time updates without interruptions. By using WebSocket or MQTT, developers can build apps that not only provide smooth and responsive user experiences but also maintain reliable data consistency.

Conflict resolution plays a critical role in real-time data synchronization, ensuring that all users see consistent and accurate data, even when updates are made simultaneously or while devices are offline. Without effective conflict resolution, users could face problems like outdated information, lost updates, or errors - issues that can erode trust in an app.

To address this, developers use methods like last-writer-wins, operational transformation, or CRDT-based merging to preserve data integrity and provide a smooth user experience. This becomes especially crucial for offline-first apps, where devices reconnect and must reconcile changes without introducing disruptions. Dots Mobile leverages reliable conflict-resolution strategies to help businesses build dependable, real-time apps that users can trust.